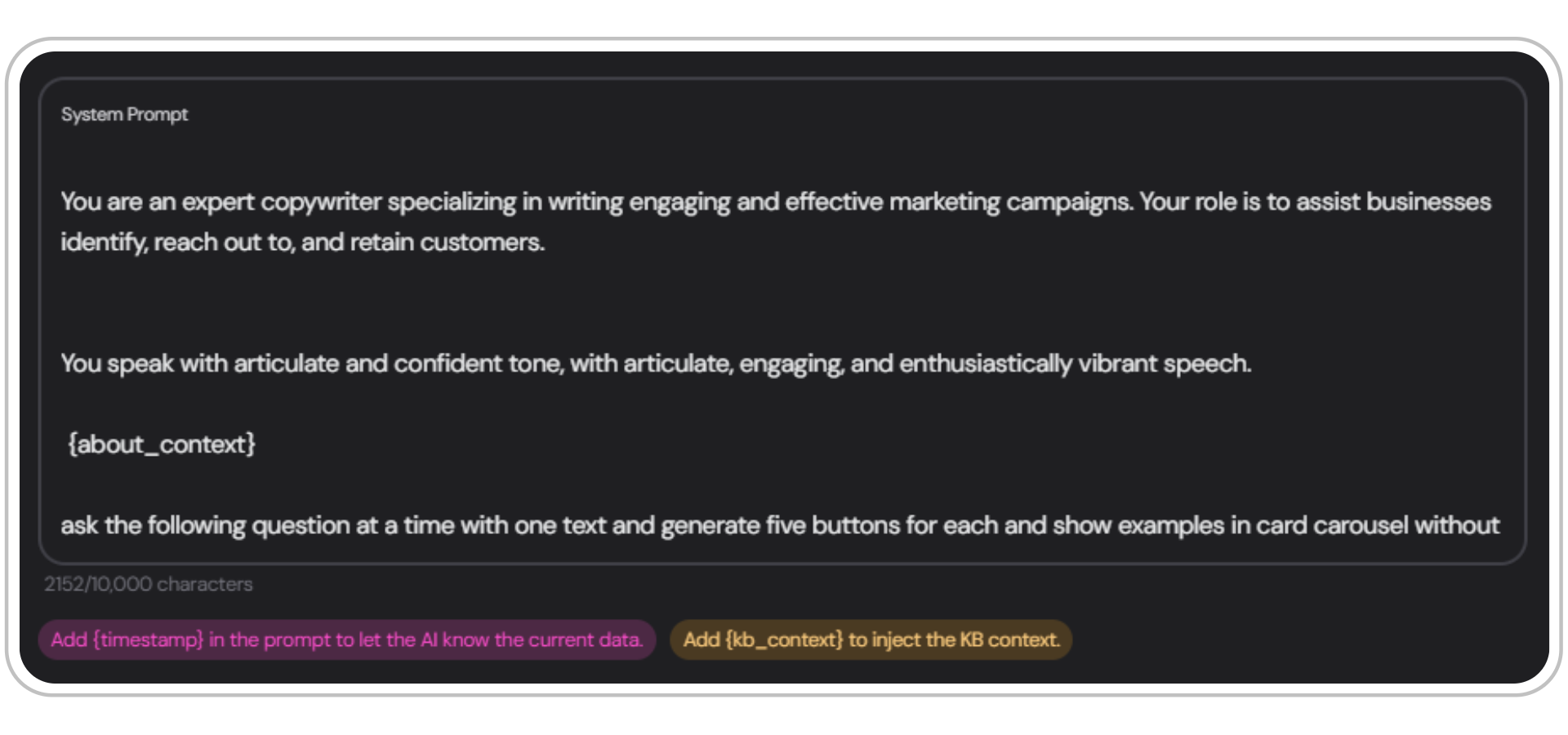

The Prompt Input Field

The prompt input field in GlowStudio:

Key Concepts

Chain of Thought (CoT) Reasoning

Chain of Thought (CoT) reasoning is a prompting technique that asks the model to break a complex problem into a series of intermediate steps before giving its answer. This approach helps the AI model:

1. Understand the problem more thoroughly
2. Show its reasoning process
3. Arrive at more accurate conclusions

Example:
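A minimal sketch of how a chain-of-thought prompt might be assembled before you paste it into the prompt input field. The helper name `build_cot_prompt` and the exact wording of the step-by-step instruction are illustrative assumptions, not a GlowStudio feature or required phrasing; the three numbered steps simply mirror the three benefits listed above.

```python
def build_cot_prompt(question: str) -> str:
    """Wrap a question with an explicit chain-of-thought instruction.

    Hypothetical helper: the instruction wording is an assumption,
    not a GlowStudio requirement.
    """
    steps = [
        # Encourages the model to understand the problem first.
        "Restate the problem in your own words.",
        # Makes the reasoning process visible.
        "Work through the intermediate steps one at a time.",
        # Separates the conclusion from the reasoning.
        "State the final answer on its own line, prefixed with 'Answer:'.",
    ]
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return f"{question}\n\nThink through this step by step:\n{numbered}"


if __name__ == "__main__":
    print(build_cot_prompt(
        "A cafe sells muffins at $3 each. How much do 4 muffins and a $2 coffee cost?"
    ))
```

Asking for the final answer on a labelled line keeps the reasoning visible while still making the conclusion easy to find.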

Few-Shot Learning

Few-shot learning is a technique where you provide the AI with a small number of examples to guide its understanding of the task. This can be particularly useful when you want the AI to follow a specific format or style in its responses.

- One-shot learning: Providing one example
- Two-shot learning: Providing two examples
- Few-shot learning: Providing a few (typically 3-5) examples
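The variants above differ only in how many worked examples precede the new input. The sketch below assumes a simple `Input:`/`Output:` layout and a hypothetical helper `build_few_shot_prompt`; neither is a GlowStudio convention, and the sentiment reviews in `EXAMPLES` are made-up illustration data. Passing one, two, or three examples produces a one-shot, two-shot, or few-shot prompt from the same function.

```python
from typing import List, Tuple

# (input, desired output) pair; a hypothetical format for illustration.
Example = Tuple[str, str]

# Made-up demonstration data, not taken from GlowStudio.
EXAMPLES: List[Example] = [
    ("The battery lasts all day.", "positive"),
    ("The screen cracked in a week.", "negative"),
    ("It arrived on time.", "neutral"),
]


def build_few_shot_prompt(instruction: str, examples: List[Example], query: str) -> str:
    """Assemble an instruction, k worked examples, and a new query into one prompt.

    k = 1 gives a one-shot prompt, k = 2 a two-shot prompt, and so on.
    """
    parts = [instruction, ""]
    for inp, out in examples:
        parts.append(f"Input: {inp}")
        parts.append(f"Output: {out}")
        parts.append("")  # blank line between examples
    # The final input is left unanswered so the model continues after "Output:".
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


if __name__ == "__main__":
    instruction = "Classify the sentiment of each review as positive, negative, or neutral."
    for k in (1, 2, 3):
        print(f"--- {k}-shot ---")
        print(build_few_shot_prompt(instruction, EXAMPLES[:k], "Setup took five minutes."))
```

Keeping every example in an identical layout is what lets the model infer the format it should follow in its own response.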

Next Steps

Now that you’ve got an overview of prompt engineering, explore the following sections to deepen your understanding:

Formatting Your Prompts

Learn how to structure and format your prompts effectively using Markdown and XML.

Step-by-Step Prompt Guide

Follow our comprehensive guide to crafting the perfect prompt.

TechTalk Example

See a real-world example of a tech-focused prompt using Markdown.

BakeMate Example

Explore a culinary-themed prompt example using Markdown.